Don't Dismantle your Data Silos – Rewire them with Data Contracts, Visibility, and Automation

Transform data silos into interconnected networks with Witboost's approach to data contracts, governance, and automation for enhanced access,...

When it comes to modelling Data Products, people tend to panic. There are no clear rules, only conceptual indications that are not actionable.

Below we’ll go through some rules of thumb we can follow when modelling Data Products.

To distribute the ownership of data modelling across multiple data teams, we need to define practical guidelines, because not every team can extract golden rules for data modelling from the Data Mesh principles and apply them consistently.

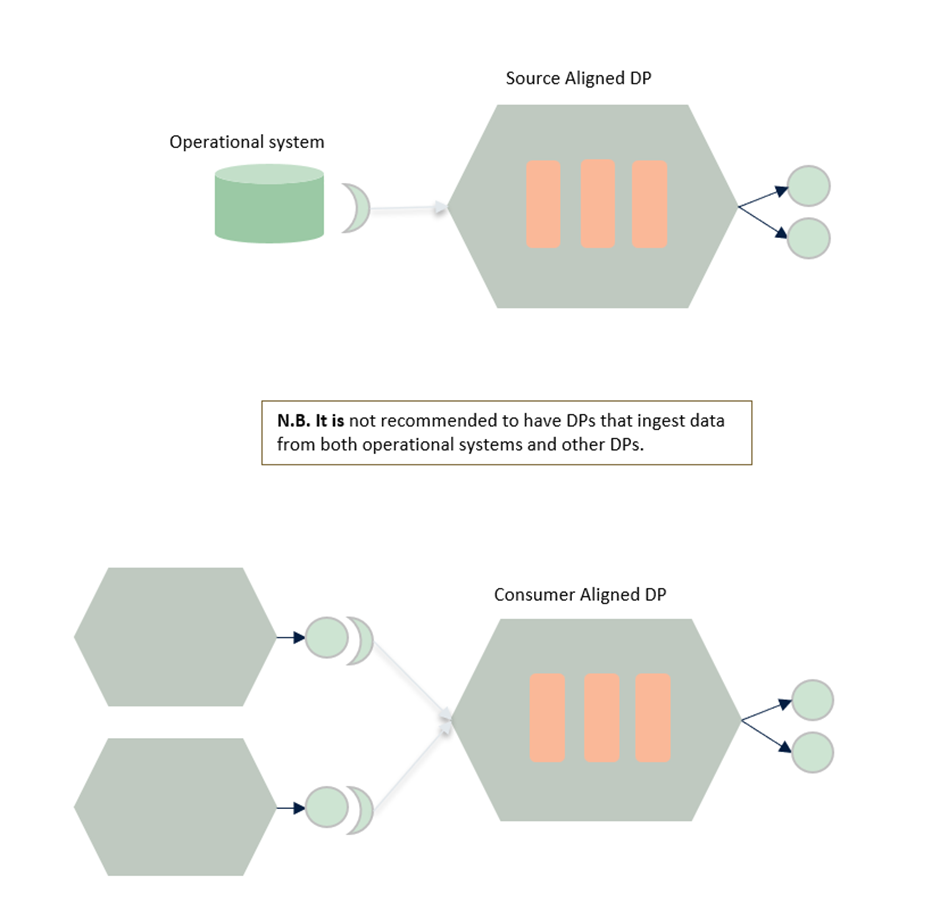

First of all, let’s talk about the different kinds of data domains and Data Products. The Data Mesh reference book by Zhamak Dehghani talks about three types of domain data: source-aligned, aggregate, and consumer-aligned.

When it comes to aggregated domain data, in the book, there is a side note (warning):

I strongly caution you against creating ambitious aggregate domain data — aggregate domain data that attempts to capture all facets of a particular concept, like listener 360, and serve many organization-wide data users.

Such aggregates can become too complex and unwieldy to manage, difficult to understand and use for any particular use case, and hard to keep up to date. In the past, the implementation of Master Data Management (MDM) has attempted to aggregate all facets of shared data assets in one place and one model. This is a move back to single monolithic schema modeling that doesn’t scale.

Data Mesh proposes that end consumers compose their own fit-for-purpose data aggregates and resist the temptation of highly reusable and ambitious aggregates.

I would take this warning to the next level: aggregate domain data do not exist.

There are several reasons for this ambitious declaration:

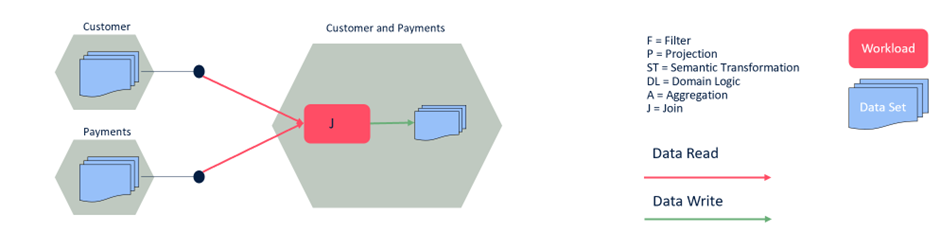

In the following scenario, the “customer and payments” Data Product is just the result of a join: it merges information from two different data domains without any specific domain logic or semantic transformation. In my opinion, this is not even a Data Product because:

Aggregate domain data is not adding business value.

My suggestion is to rely only on source-aligned and consumer-aligned Data Products, with the following simple rule:

I also suggest reading this article by Roberto Coluccio.

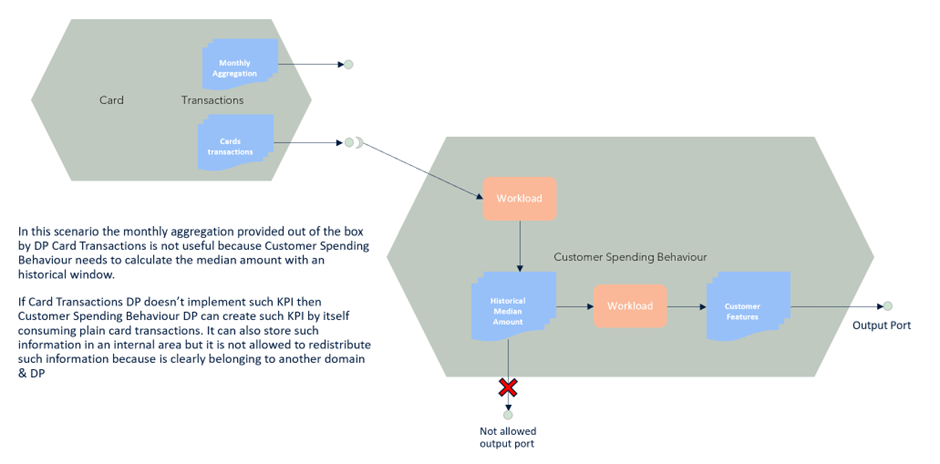

Another critical concept for Data Product modelling is the “no-redistribution” principle, introduced in Data Management at Scale (oreilly.com) by Piethein Strengholt.

This principle states that a domain should never redistribute data that comes from and belongs to another domain unless it changes the semantic meaning of that data. In DDD, when a piece of information crosses the boundary between two domains and changes its importance, we are talking about context maps.

Data redistribution should be allowed and encouraged only if paired with context map information. Otherwise, consumers will get confused about who is the actual owner and master of a certain piece of information.

There is a vast difference between consuming and using specific information and exposing it. A Data Product should be allowed to copy and store information from other domains, but not to share it through its output ports.

Suppose you copy data from another domain (copying implies persisting it, not just using it). In that case, you immediately become accountable for managing it according to compliance and security constraints, because data duplication results in new ownership.
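One way to make the no-redistribution principle checkable is to tag every field exposed on an output port with the domain that masters it. The sketch below is only an illustration under that assumption: the `Field` class, the `semantically_changed` flag, and the example field names are hypothetical and do not come from any specific platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    """A field exposed on an output port (hypothetical metadata model)."""
    name: str
    owner_domain: str                   # domain that masters this information
    semantically_changed: bool = False  # True if this product changed its meaning


def redistribution_violations(product_domain: str, output_port: list[Field]) -> list[str]:
    """Return the fields that expose another domain's data without a semantic change."""
    return [
        f.name
        for f in output_port
        if f.owner_domain != product_domain and not f.semantically_changed
    ]


# Hypothetical output port of a Data Product owned by the "risk" domain.
port = [
    Field("risk_score", owner_domain="risk"),
    Field("customer_email", owner_domain="customer"),
    Field("risk_component", owner_domain="payments", semantically_changed=True),
]

print(redistribution_violations("risk", port))  # ['customer_email']
```

Note that the check only looks at what is exposed. Storing `customer_email` internally would still be allowed, with the accountability that comes with persisting it.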

When you approach the data modelling of your Data Product it could be helpful to proceed in this way:

Another dilemma is about aggregations and denormalization. As we know, denormalization in DDD and Data Mesh is fine, but it is unclear if you need to create a new Data Product to implement it.

My short answer is no.

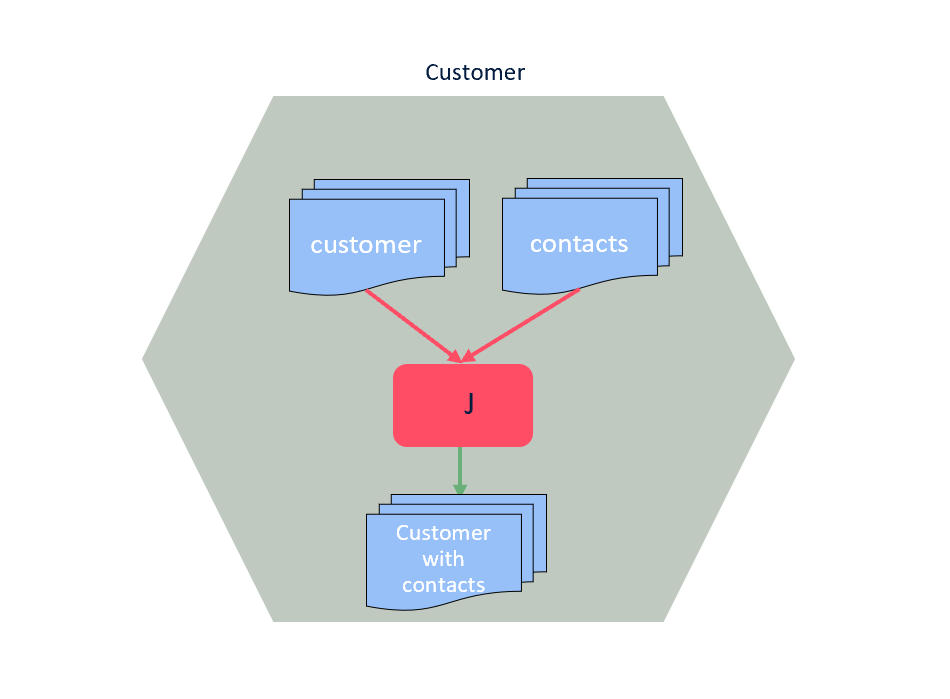

As long as you are not creating new data and new knowledge, you should stay within the bounded context of the same Data Product.

This principle is valid only when you are managing the same piece of information.

We can say that a Data Product has two souls. When it reads data from other Data Products or external sources, it acts as a consumer; when it writes data to its output ports, it acts as a producer. Keeping this distinction in mind makes the general rules at the end easier to understand.

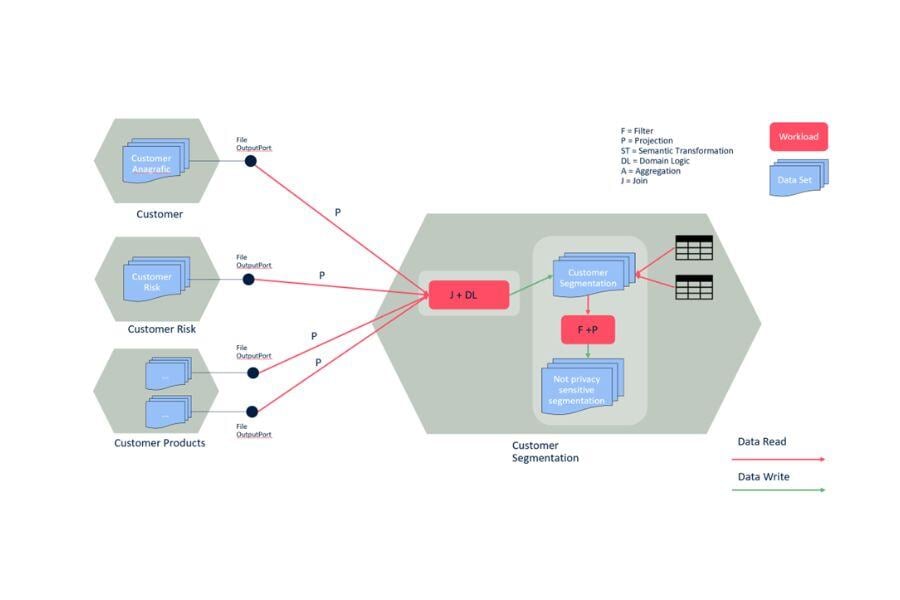

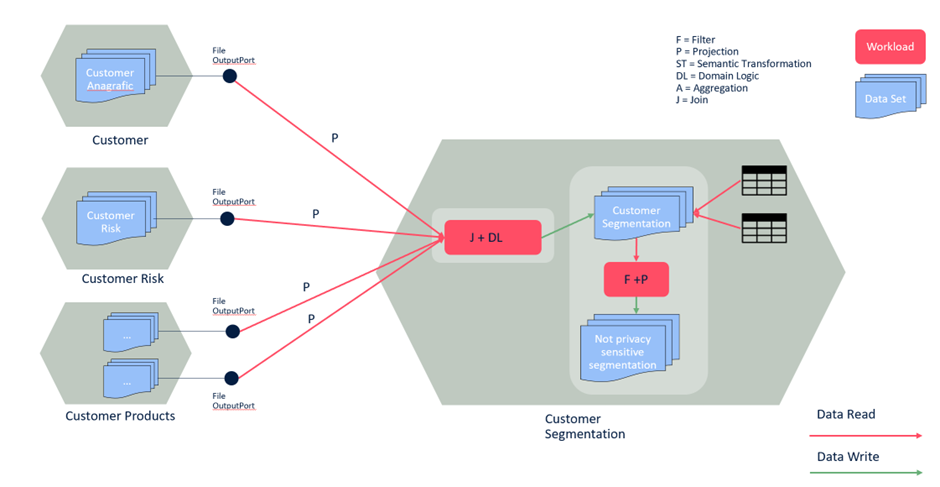

Suppose you are generating a new business concept that belongs to a specific domain (Risk). If you need to join multiple data domains and apply some semantic transformation (which always hides domain logic), then it is worth creating a new Data Product, because you are creating a brand-new business concept. For example, in Risk Tranches we convert incoming installments into risk components, which is a semantic transformation.

Otherwise, if you are aggregating data that already belongs to your Data Product, you are still in the same bounded context, even if you apply specific domain logic. In that case you should add a new Output Port to the same Data Product, because you probably only need some denormalization to make the data more consumer-friendly.
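The choice between a new Data Product and a new Output Port can be condensed into a small decision function. This is a simplified reading of the rules discussed so far, not a formal definition: the `Decision` enum, the function name, and the two boolean inputs are assumptions made for illustration.

```python
from enum import Enum


class Decision(Enum):
    NEW_DATA_PRODUCT = "create a new Data Product"
    NEW_OUTPUT_PORT = "add an Output Port to the same Data Product"
    NOT_A_DATA_PRODUCT = "plain join: leave it to the consumers"


def modelling_decision(inputs_owned_by_product: bool, semantic_transformation: bool) -> Decision:
    """Apply the rule of thumb for aggregations and denormalization.

    inputs_owned_by_product: the data being reshaped already belongs to this Data Product.
    semantic_transformation: the logic produces a new business concept.
    """
    if inputs_owned_by_product:
        # Same bounded context, even when domain logic is applied.
        return Decision.NEW_OUTPUT_PORT
    if semantic_transformation:
        # Data from other domains is turned into a brand-new business concept.
        return Decision.NEW_DATA_PRODUCT
    # A join across domains with no new meaning adds no business value.
    return Decision.NOT_A_DATA_PRODUCT


print(modelling_decision(inputs_owned_by_product=False, semantic_transformation=True).value)
```

Under these assumptions, the Risk Tranches example maps to `NEW_DATA_PRODUCT`, while the "customer and payments" join maps to `NOT_A_DATA_PRODUCT`.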

One question I always get is: “Do I need to create all the possible views of my data for my consumers?”

The short answer is no.

This question arises because today, to align non-interoperable data silos, each data producer exports data sets.

The producer maintains several export processes and snapshots based on customer requirements. This pattern always leads to data integration, operations and maintenance burdens. This is totally against the product thinking that Data Mesh requires.

A product manager does not implement features based on individual customer requests; the product manager validates features against the addressable market to achieve product-market fit.

When a producer is exporting data according to consumer requests (maybe coming from a different domain), there is a high risk that the producer will end up implementing some domain-specific logic or semantic transformation belonging to the consumer. This would be a clear violation of the domain-oriented ownership principle.

If we implement interoperability properly, the consumer will probably be in a position to apply filters and projections on the fly by itself. The producer should generate new output ports with embedded filters and projections only when security or compliance requires it, because the producer is accountable for that data.
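As a minimal sketch of what "filters and projections on the fly" means on the consumer side, the example below reads a general-purpose output port and derives a consumer-specific view without asking the producer for a dedicated export. The row layout, the column names, and the `read_view` helper are hypothetical; a real output port would more likely be queried through SQL or a similar interface.

```python
from typing import Callable, Iterable, Iterator

Row = dict[str, object]


def read_view(rows: Iterable[Row], columns: list[str],
              predicate: Callable[[Row], bool]) -> Iterator[Row]:
    """Filter rows with `predicate`, then keep only the requested columns."""
    for row in rows:
        if predicate(row):
            yield {column: row[column] for column in columns}


# Hypothetical rows served by a producer's single, general-purpose output port.
payments_port = [
    {"payment_id": 1, "customer_id": "c1", "amount": 50, "status": "settled"},
    {"payment_id": 2, "customer_id": "c2", "amount": 250, "status": "settled"},
    {"payment_id": 3, "customer_id": "c1", "amount": 900, "status": "failed"},
]

# The consumer builds its own view: settled payments, two columns only.
settled = list(read_view(
    payments_port,
    columns=["payment_id", "amount"],
    predicate=lambda row: row["status"] == "settled",
))
print(settled)  # [{'payment_id': 1, 'amount': 50}, {'payment_id': 2, 'amount': 250}]
```

The filter logic lives with the consumer, so any domain-specific meaning attached to it stays in the consumer's domain.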

These are the general rules we can apply to Data Product modelling.

We have applied these rules successfully in several complex enterprise contexts, and I hope they will help elevate the Data Mesh practice.
