How to identify Data Products? Welcome “Data Product Flow”

How do we identify Data Products in a Data Mesh environment? Data Product Flow can help you answer that question.

Learn how to build a robust data fabric using data products to enhance metadata quality, governance, and integration across your organization.

The vision of a data fabric is an ambitious goal: a seamless layer that automates data integration, delivers real-time insights, and unifies data across an organization.

Data Fabric is mainly an architecture and does not require a transformation of practices. However, implementing a successful data fabric requires first solving several foundational issues, the most critical being metadata quality and completeness.

Yet, metadata quality does not improve in isolation. It depends on an ecosystem where ownership, governance, and standards are embedded in data management. This is where data products come into play. They are not just a building block but the foundation of a true Data Fabric, enabling metadata quality, standardization, and architectural consistency.

Data Mesh vs. Data Fabric is a heavily debated topic, but the two have completely different drivers and are complementary. Let's explore how Data Products can be considered the first step towards a real Data Fabric.

In this piece, we'll discuss data products as a foundational block for metadata and architecture, data product management platforms, business ontologies, knowledge graphs, a roadmap that covers the entire process, and how to recognize whether you have a data fabric.

Let's dive in:

Data products are reusable, well-defined solutions that align data with business objectives. They come with clear ownership, governance, and metadata standards, ensuring that every information domain is treated as a product with a clear purpose and lifecycle. Here’s why they are the essential starting point for any data fabric initiative:

Metadata quality is a never-ending challenge in large enterprises, often due to fragmented responsibilities and technologies and inconsistent documentation. Data products address this challenge in two ways:

Every data product has a dedicated owner, responsible for maintaining high-quality metadata and ensuring it is aligned with business needs.

Data products require consistent documentation, which drives standardization and reduces ambiguity in data management.

Without data products, metadata remains fragmented and ad hoc, making it impossible to build the automated intelligence required for a data fabric.
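To make the ownership and documentation requirements concrete, here is a minimal Python sketch of how a platform might refuse to publish a data product whose descriptor is missing mandatory metadata. The descriptor class, the field names in `REQUIRED_FIELDS`, and the example values are hypothetical assumptions for illustration, not a standard defined in this article.

```python
from dataclasses import dataclass, field

# Hypothetical set of mandatory metadata fields; a real list would come
# from your own governance standard.
REQUIRED_FIELDS = ("description", "domain", "owner", "schema", "sla")


@dataclass
class DataProductDescriptor:
    """Minimal, hypothetical descriptor for a data product."""
    name: str
    metadata: dict = field(default_factory=dict)


def missing_metadata(descriptor: DataProductDescriptor) -> list[str]:
    """Return the mandatory fields that are absent or empty."""
    return [key for key in REQUIRED_FIELDS if not descriptor.metadata.get(key)]


def validate(descriptor: DataProductDescriptor) -> None:
    """Raise if the descriptor does not meet the (assumed) standard."""
    missing = missing_metadata(descriptor)
    if missing:
        raise ValueError(f"{descriptor.name}: missing mandatory metadata {missing}")


orders = DataProductDescriptor(
    name="orders",
    metadata={
        "description": "All confirmed customer orders",
        "domain": "sales",
        "owner": "sales-data-team@example.com",  # the accountable owner
        "schema": {"order_id": "string", "customer_id": "string"},
        "sla": "daily by 06:00 UTC",
    },
)
validate(orders)  # passes: every mandatory field is filled

draft = DataProductDescriptor(name="returns", metadata={"domain": "sales"})
print(missing_metadata(draft))  # ['description', 'owner', 'schema', 'sla']
```

In practice such a check would run inside the platform's publication workflow, so that an incomplete descriptor never reaches the catalog.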

Data products also enforce architectural patterns that simplify and standardize data integration. These include:

By establishing these patterns, data products provide the architectural foundation for scaling a data fabric.

Some claim that a Data Fabric solution can sit directly on top of operational data sources. Expecting such a Data Fabric to serve real-time insights to users is unrealistic for the following reasons:

Operational data very often lacks analytical dimensions (e.g., historical changes) and requires data modelling and preparation.

Operational data stores are not suited to analytical workloads (e.g., running analytical queries directly on a mainframe drives up its consumption).

A proper analytical foundation must therefore be in place.
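As an illustration of the modelling work that operational data needs, the sketch below derives a history of changes from successive snapshots of an operational table using the common Slowly Changing Dimension (SCD) Type 2 technique. SCD Type 2 is one possible approach, chosen here for illustration; the row layout and the `apply_snapshot` function are hypothetical.

```python
from datetime import date


def apply_snapshot(history, snapshot, as_of):
    """Merge one operational snapshot into an SCD Type 2 history (in place).

    history:  list of rows {"key", "value", "valid_from", "valid_to"};
              valid_to is None for the version that is currently valid.
    snapshot: dict mapping business key -> current attribute value, i.e.
              what the operational system knows right now.
    as_of:    date on which the snapshot was taken.
    """
    current = {row["key"]: row for row in history if row["valid_to"] is None}
    for key, value in snapshot.items():
        row = current.get(key)
        if row is not None and row["value"] == value:
            continue  # unchanged: keep the open version
        if row is not None:
            row["valid_to"] = as_of  # close the superseded version
        history.append(
            {"key": key, "value": value, "valid_from": as_of, "valid_to": None}
        )
    for key, row in current.items():
        if key not in snapshot:
            row["valid_to"] = as_of  # key no longer exists in the source
    return history


history = []
apply_snapshot(history, {"C1": "Milan"}, date(2024, 1, 1))
apply_snapshot(history, {"C1": "Rome"}, date(2024, 3, 1))
for row in history:
    print(row)
```

The operational source only ever shows "Rome"; the analytical history keeps both versions with their validity intervals, and keys that disappear are closed rather than deleted.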

Data products should have standardized change management processes that tightly connect data and metadata updates. In a data product-centric environment, breaking changes are also managed through a standard, well-defined process.

A Data Fabric solution cannot survive misalignment between the actual data and the metadata and cannot absorb breaking changes without impacting users.

Adopting a rigorous Data Product Lifecycle is fundamental for the Data Fabric to reach its full potential rather than backfiring.

In this scenario, treating a Data Product as nothing more than a data asset promoted into a Data Catalog/Marketplace leads to disaster.
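One way to connect data and metadata updates in a standard change process is to derive a data product's version from the diff of its schema. The sketch below assumes semantic versioning and treats removed columns or changed types as breaking; the versioning scheme, the rules, and the function names are illustrative assumptions, not a prescribed standard.

```python
def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """List schema changes that would break existing consumers (assumed rules)."""
    problems = []
    for column, old_type in old.items():
        if column not in new:
            problems.append(f"removed column {column!r}")
        elif new[column] != old_type:
            problems.append(f"changed type of {column!r}: {old_type} -> {new[column]}")
    return problems


def next_version(current: str, old: dict[str, str], new: dict[str, str]) -> str:
    """Derive the next semantic version (MAJOR.MINOR.PATCH) from a schema diff."""
    major, minor, patch = (int(part) for part in current.split("."))
    if breaking_changes(old, new):
        return f"{major + 1}.0.0"  # breaking: consumers must migrate
    if set(new) - set(old):
        return f"{major}.{minor + 1}.0"  # additive: new columns only
    return f"{major}.{minor}.{patch + 1}"  # no schema change


v1 = {"order_id": "string", "amount": "decimal"}
v2 = {**v1, "currency": "string"}
v3 = {"order_id": "string", "amount": "float"}

print(next_version("1.4.2", v1, v2))  # 1.5.0
print(next_version("1.4.2", v1, v3))  # 2.0.0
print(breaking_changes(v1, v3))  # ["changed type of 'amount': decimal -> float"]
```

A platform could run such a check in its deployment pipeline and block a release whose declared version does not match the computed one; that enforcement step is likewise an assumption.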

With data products in place, enterprises can focus on improving metadata quality, which is essential for the data fabric to function. Metadata allows the fabric to:

However, without the standardization and ownership enforced by data products, metadata often remains incomplete or inconsistent, undermining the fabric’s potential.

Incomplete metadata leads to errors, crashes, and wrong answers for users, who often have little visibility into why something isn't working.

Enterprises need a data product management platform to operationalize data products and ensure their scalability and effectiveness. This platform is the operational hub for creating, managing, and governing data products. Its core roles are:

This platform also lays the groundwork for the next phase: automating the creation of the data fabric. By standardizing metadata and architectural patterns, the platform enables the data fabric to consume data products effortlessly, automating integration and mapping.

A Data Product Management Platform empowers teams to create and manage data products independently, reducing bottlenecks while maintaining central governance.

Simply deciding to adopt the Data Product practice does not guarantee success: Data Products deliver on their promise only when they are implemented correctly, at scale, and under real IT governance.

For a data fabric to provide intelligent automation, it must understand the business context of data. This is achieved through a business ontology, which makes the relationships between business concepts machine-readable.

By linking data products to the business ontology, metadata becomes richer and more meaningful.

The ontology allows the fabric to interpret data in context, resolving ambiguities and enabling more accurate integration.

For example, in retail, a business ontology might define relationships such as “A ‘customer’ places an ‘order’ that contains ‘products.’” Linking data products to this ontology ensures the data fabric can understand and process these relationships automatically.

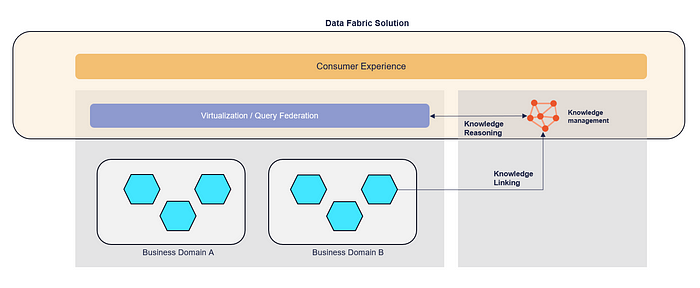
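The retail ontology described above can be sketched as a handful of (subject, predicate, object) triples, with each data product linked to the business concept it represents. In the minimal Python example below, a breadth-first search over those relationships finds which data products are needed to relate customers to the products they buy. The data product names and the link table are hypothetical.

```python
from collections import deque

# Business ontology as (subject, predicate, object) triples,
# mirroring the retail example in the text.
ONTOLOGY = [
    ("Customer", "places", "Order"),
    ("Order", "contains", "Product"),
]

# Hypothetical links from data products to the concept each one represents.
DATA_PRODUCT_CONCEPTS = {
    "crm.customers": "Customer",
    "sales.orders": "Order",
    "catalog.products": "Product",
}


def concept_path(start, goal):
    """Breadth-first search along ontology relationships (subject -> object)."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for subject, _predicate, obj in ONTOLOGY:
            if subject == path[-1] and obj not in seen:
                seen.add(obj)
                queue.append(path + [obj])
    return None  # no chain of relationships connects the two concepts


def data_products_for(start, goal):
    """Data products needed to relate `start` to `goal`, in path order."""
    path = concept_path(start, goal)
    if path is None:
        return []
    return [
        product
        for concept in path
        for product, linked in sorted(DATA_PRODUCT_CONCEPTS.items())
        if linked == concept
    ]


print(concept_path("Customer", "Product"))  # ['Customer', 'Order', 'Product']
print(data_products_for("Customer", "Product"))
# ['crm.customers', 'sales.orders', 'catalog.products']
```

A production ontology would more likely be expressed in a standard such as RDF or OWL; the point of the sketch is only that, once data products are linked to concepts, the mapping can be derived from the ontology instead of being hand-written for every question.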

To operationalize the data and metadata created by data products, along with their links to the business ontology, enterprises need advanced knowledge graph solutions powered by virtualization, so that they do not create yet another silo in a graph database.

A knowledge graph powered by Virtualization allows the data fabric to:

A knowledge graph, built on the business ontology, enables the data fabric to:

The data fabric can deliver dynamic, real-time, and context-aware integration capabilities by combining virtualization with semantic understanding.
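To illustrate how virtualization avoids copying data into a separate graph store, the sketch below resolves a relationship from the knowledge graph by reading both data products at query time and joining them on a key. The output-port functions return in-memory rows here and stand in for queries that a virtualization engine would push down to the data products; all names are hypothetical.

```python
from typing import Callable

Row = dict[str, object]


# Hypothetical output ports. In a real setup each function would query the
# data product in place (for example through a virtualization engine);
# here they return in-memory rows so the sketch runs on its own.
def customers_port() -> list[Row]:
    return [
        {"customer_id": "C1", "name": "Ada"},
        {"customer_id": "C2", "name": "Linus"},
    ]


def orders_port() -> list[Row]:
    return [
        {"order_id": "O1", "customer_id": "C1", "amount": 120.0},
        {"order_id": "O2", "customer_id": "C1", "amount": 30.0},
        {"order_id": "O3", "customer_id": "C2", "amount": 75.0},
    ]


# Knowledge graph: each concept points at a port, each relationship at a join key.
CONCEPT_PORTS: dict[str, Callable[[], list[Row]]] = {
    "Customer": customers_port,
    "Order": orders_port,
}
RELATIONSHIPS = {("Customer", "places", "Order"): "customer_id"}


def follow(subject: str, predicate: str, obj: str) -> list[tuple[Row, Row]]:
    """Resolve a relationship by reading both ports at query time and joining."""
    key = RELATIONSHIPS[(subject, predicate, obj)]
    index: dict[object, list[Row]] = {}
    for row in CONCEPT_PORTS[obj]():
        index.setdefault(row[key], []).append(row)
    return [
        (left, right)
        for left in CONCEPT_PORTS[subject]()
        for right in index.get(left[key], [])
    ]


pairs = follow("Customer", "places", "Order")
totals: dict[str, float] = {}
for customer, order in pairs:
    name = str(customer["name"])
    totals[name] = totals.get(name, 0.0) + float(order["amount"])
print(totals)  # {'Ada': 150.0, 'Linus': 75.0}
```

Because the ports are called only when a question is asked, the answer reflects the data products' current state and no second copy has to be kept in sync.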

There are two virtualization/federation patterns that you can use in your overarching target architecture:

Pro: Decoupling

Cons:


Pros:

Cons:


A deeply automated virtualization layer is recommended in both the Data Product and the Data Fabric solution, to better decouple data creation and serving from the conversion of data into user insights.

Here’s the short version of how enterprises can build a data fabric, starting with data products:

Many vendors have started to undermine the Data Fabric concept by applying the label loosely. Use these three questions to find out whether a solution is an actual Data Fabric:

If the answer to the first part of each question is always yes, there's a good chance you have a real Data Fabric solution. If there are caveats like those in the second parts and the follow-up questions, be careful: it could be just marketing positioning.

A true Data Fabric is not built overnight, nor can it succeed without strong foundations. Data products are the cornerstone of this effort, creating the ownership, metadata quality, and architectural patterns required to scale. By embedding governance, linking data to business ontologies, and adopting advanced virtualization techniques, enterprises can transform the Data Fabric vision into reality.

Remember, the path to a Data Fabric is iterative and cannot be approached as a big bang. In addition, the Data Fabric layer must be automated and governed by the self-service platform.
